Semantic Scholar API - Tutorial

Get Started with Semantic Scholar API

Learn to search for papers and authors, download datasets, and more

Introduction

The Semantic Scholar REST API uses standard HTTP verbs, response codes, and authentication. This tutorial will teach you how to interact with the API by sending requests and analyzing the responses. All code examples are shown in Python. If you prefer a code-free experience, follow along using the Semantic Scholar Postman Collection, which lets you test out the API on Postman, a popular and free API testing platform.

What is an Application Programming Interface (API)?

An API is a structured way for applications to communicate with each other. Applications can send API requests to one another, for instance to retrieve data.

Each API request consists of:

- An API endpoint, which is the URL that requests are sent to. The URL consists of the API’s base URL and the specific endpoint’s resource path (see Figure 1).

- A request method, such as GET or POST. This is sent in the HTTP request and tells the API what type of action to perform.

![a diagram displaying the base url [https://api.semanticscholar.org/graph/v1/] and resource path [/paper/search]](https://cdn.prod.website-files.com/605236bb767e9a5bb229c63c/65553b12d016d1fc01d52f2d_GS_endpointUrlExample.png)

Figure 1. The URL for Semantic Scholar’s paper relevance search endpoint.

Each API request may also include:

- Query parameters, which are appended to the end of the URL, after the resource path.

- A request header, which may contain information about the API key being used.

- A request body, which contains data being sent to the API.
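As a minimal sketch of how these pieces fit together, the snippet below joins the Academic Graph base URL, a resource path, and some query parameters into a full request URL. The helper name build_url is hypothetical and used only for illustration; it relies on Python's standard urllib.parse module.

```python
from urllib.parse import urlencode

# Base URL of the Academic Graph API
BASE_URL = "https://api.semanticscholar.org/graph/v1"


def build_url(resource_path, query_params=None):
    """Join the base URL, a resource path, and optional query parameters."""
    url = BASE_URL + resource_path
    if query_params:
        # Query parameters are appended after the resource path, following a "?"
        url += "?" + urlencode(query_params)
    return url


print(build_url("/paper/search", {"query": "halloween", "limit": 3}))
```

In practice an HTTP library usually does this joining for you when you pass the parameters separately; the sketch only makes the structure of the URL visible.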

After the request is sent, the API returns a response. The response includes a status code indicating whether the request was successful, and it may also include any requested data.

Common status codes are:

- 200, OK. The request was successful.

- 400, Bad Request. The server could not understand your request. Check your parameters.

- 401, Unauthorized. You're not authenticated or your credentials are invalid.

- 403, Forbidden. The server understood the request but refused it. You don't have permission to access the requested resource.

- 404, Not Found. The requested resource or endpoint does not exist.

- 429, Too Many Requests. You've hit the rate limit; slow down your requests.

- 500, Internal Server Error. Something went wrong on the server’s side.
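One way a client might act on these codes is sketched below. The helper names describe_status and should_retry are hypothetical, and the choice to retry only on 429 and 500 is an assumption made for illustration, not a rule stated by the API.

```python
# Meanings of the common status codes listed above
STATUS_MEANINGS = {
    200: "OK",
    400: "Bad Request",
    401: "Unauthorized",
    403: "Forbidden",
    404: "Not Found",
    429: "Too Many Requests",
    500: "Internal Server Error",
}

# Assumption: only rate-limit and server-side errors are worth retrying,
# because repeating a malformed or unauthorized request will fail again.
RETRYABLE = {429, 500}


def describe_status(status_code):
    """Return a short human-readable label for a status code."""
    meaning = STATUS_MEANINGS.get(status_code, "Unknown status")
    return f"{status_code} {meaning}"


def should_retry(status_code):
    """Return True when sending the same request again later may succeed."""
    return status_code in RETRYABLE


for code in (200, 404, 429):
    print(describe_status(code), "| retry:", should_retry(code))
```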

The Semantic Scholar APIs

Semantic Scholar contains three APIs, each with its own unique base URL:

- Academic Graph API returns details about papers, paper authors, paper citations and references. Base URL: https://api.semanticscholar.org/graph/v1

- Recommendations API recommends papers based on other papers you give it. Base URL: https://api.semanticscholar.org/recommendations/v1

- Datasets API lets you download Semantic Scholar’s datasets onto your local machine, so you can host the data yourself and do custom queries. Base URL: https://api.semanticscholar.org/datasets/v1

See the Semantic Scholar API documentation for more information about each API and its endpoints. The documentation describes how to correctly format requests and parse responses for each endpoint.

How to make requests faster and more efficiently

Heavy use of the API can cause a slowdown for everyone. Here are some tips to avoid hitting rate limit ceilings and slowdowns when making requests:

- Use an API Key. Unauthenticated users all share a single API key, so each one is affected by the traffic from everyone else. An individual API key automatically gives you a rate of 1 request per second across all endpoints. In some cases, users may be granted a slightly higher rate following a review. Learn more about API keys and how to request one here.

- Use batch endpoints. Some endpoints have a corresponding batch or bulk endpoint that returns more results in a single response. Examples include the paper relevance search (bulk version: paper bulk search) and the paper details endpoint (batch version: paper batch endpoint). When requesting large quantities of data, use the bulk or batch versions whenever possible.

- Limit “fields” parameters. Most endpoints in the API contain the “fields” query parameter, which allows users to specify what data they want returned in the response. Avoid including more fields than you need, because that can slow down the response rate.

- Download Semantic Scholar Datasets. When you need a request rate that is higher than the rate provided by API keys, you can download Semantic Scholar’s datasets and run queries locally. The Datasets API provides endpoints for easily downloading and maintaining Semantic Scholar datasets. See the How to Download Full Datasets section of the tutorial under Additional Resources for more details.
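The batching and pacing tips above could be combined roughly as follows. This is a sketch under stated assumptions: chunked, Throttle, and process_in_batches are hypothetical helpers, the default batch size of 500 is an assumed limit that should be checked against the documentation of the batch endpoint you use, and send_batch stands in for whatever function actually sends one batch request.

```python
import time


def chunked(items, size):
    """Yield consecutive slices of `items` with at most `size` elements each."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


class Throttle:
    """Keep at least `min_interval` seconds between consecutive calls to wait()."""

    def __init__(self, min_interval=1.0, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous call, if any

    def wait(self):
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


def process_in_batches(paper_ids, send_batch, batch_size=500, throttle=None):
    """Call `send_batch` once per batch, pausing first if a throttle is given."""
    results = []
    for batch in chunked(paper_ids, batch_size):
        if throttle is not None:
            throttle.wait()
        results.append(send_batch(batch))
    return results


# Demo without any network access: "send" each batch by counting its items.
print(process_in_batches(["a", "b", "c"], len, batch_size=2))
```

With a real request function, passing throttle=Throttle(1.0) would keep the client at or below the 1 request per second rate mentioned above.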

Example: Request paper details (using Python)

Now we’ll make a request to the paper details endpoint by running Python code. Complete the steps listed under Prerequisites below before proceeding. If you prefer to follow along in Postman, the same request in Postman is located here. For more examples of API requests using Python, see the section Make Calls to the Semantic Scholar API.

Prerequisites:

- Install Python if it is not already on your machine.

- Install pip, Python's package manager, if it is not already on your machine.

According to the Academic Graph API documentation, the paper details endpoint uses the GET method and its resource path is /paper/{paper_id}.

![a diagram displaying the base url [https://api.semanticscholar.org/graph/v1/] and resource path [/paper/search]](https://cdn.prod.website-files.com/605236bb767e9a5bb229c63c/66e9d70caf2154892b901a69_paper%20details%20path.png)

Figure 2. Each endpoint's resource path is listed in the API documentation.

When combined with the Academic Graph base URL, the endpoint’s URL is: https://api.semanticscholar.org/graph/v1/paper/{paper_id}

The curly brackets in the resource path indicate that paper_id is a path parameter, which is replaced by a value when the request is sent. Accepted formats for the value of paper_id are detailed in the Path Parameters section of the documentation.

![a diagram displaying the base url [https://api.semanticscholar.org/graph/v1/] and resource path [/paper/search]](https://cdn.prod.website-files.com/605236bb767e9a5bb229c63c/66e9dbb3be85dfbfe383219a_paper%20details%20parameters.png)

Figure 3. Accepted formats are listed in the Path Parameters section.

The Query Parameters section of the documentation only lists a single optional parameter: fields. The fields parameter takes a string of comma-separated field names, which tell the API what information to return in the response.

![a diagram displaying the base url [https://api.semanticscholar.org/graph/v1/] and resource path [/paper/search]](https://cdn.prod.website-files.com/605236bb767e9a5bb229c63c/67003dbebdebc17eb7101eb8_query%20params%20fields.png)

Figure 4. Fields that can be returned in the response are listed in the Response Schema section of Responses.

For our Python request, we'll query the same paper ID given in the documentation’s example. We'll request the title, year, abstract, and citationCount fields:

import requests

paperId = "649def34f8be52c8b66281af98ae884c09aef38b"

# Define the API endpoint URL
url = f"https://api.semanticscholar.org/graph/v1/paper/{paperId}"

# Define the query parameters
query_params = {"fields": "title,year,abstract,citationCount"}

# Directly define the API key (Reminder: Securely handle API keys in production environments)
api_key = "your api key goes here"  # Replace with the actual API key

# Define headers with API key
headers = {"x-api-key": api_key}

# Send the API request
response = requests.get(url, params=query_params, headers=headers)

# Check response status
if response.status_code == 200:
    response_data = response.json()
    # Process and print the response data as needed
    print(response_data)
else:
    print(f"Request failed with status code {response.status_code}: {response.text}")

Note that this request is using an API key. The use of API keys is optional but recommended. Learn more about API keys and how to get one here.
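Because the key is optional, one possible pattern is to read it from an environment variable and send the x-api-key header only when a key is present. The sketch below assumes a variable named S2_API_KEY, which is a hypothetical name chosen for this example; build_headers is likewise a hypothetical helper.

```python
import os


def build_headers(env=None):
    """Return request headers, adding x-api-key only when a key is configured."""
    env = os.environ if env is None else env
    api_key = env.get("S2_API_KEY")  # hypothetical environment variable name
    return {"x-api-key": api_key} if api_key else {}


# Passing a plain dict instead of os.environ makes the behavior easy to see.
print(build_headers({"S2_API_KEY": "example-key"}))
print(build_headers({}))
```

Keeping the key out of the source file also avoids committing it to version control by accident.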

We are using the Python Requests library to send the request, so the response object has a property named status_code that returns the response status. We check status_code and print either the successfully returned data or the error message.

See the API documentation for how the response is formatted. Each Status Code section expands with further details about the response data that is returned.

![a diagram displaying the base url [https://api.semanticscholar.org/graph/v1/] and resource path [/paper/search]](https://cdn.prod.website-files.com/605236bb767e9a5bb229c63c/66e9dfc4fd5ef4d187cdfa62_paper%20details%20responses.png)

Figure 5. The Responses section describes how responses are formatted.

When the request is successful, the JSON object returned in the response is:

{
    "paperId": "649def34f8be52c8b66281af98ae884c09aef38b",
    "title": "Construction of the Literature Graph in Semantic Scholar",
    "abstract": "We describe a deployed scalable system for organizing published ...",
    "year": 2018,
    "citationCount": 365
}
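Once parsed, the response is an ordinary Python dictionary. The sketch below shows one way to read the returned fields defensively, on the assumption that a requested field may occasionally be missing or null; summarize_paper is a hypothetical helper, and the sample dictionary simply repeats the response shown above.

```python
response_data = {
    "paperId": "649def34f8be52c8b66281af98ae884c09aef38b",
    "title": "Construction of the Literature Graph in Semantic Scholar",
    "abstract": "We describe a deployed scalable system for organizing published ...",
    "year": 2018,
    "citationCount": 365,
}


def summarize_paper(paper):
    """Build a one-line summary, tolerating fields that are missing or null."""
    title = paper.get("title") or "(untitled)"
    year = paper.get("year") or "n.d."
    citations = paper.get("citationCount") or 0
    return f"{title} ({year}), cited {citations} times"


print(summarize_paper(response_data))
```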

See the Make Calls to the Semantic Scholar API section for more Python examples using the paper search, paper recommendations, and authors endpoints.

Additional Resources

Pagination

Pagination is a technique used in APIs to manage and retrieve large sets of data in smaller, manageable chunks. This is particularly useful when dealing with extensive datasets to improve efficiency and reduce the load on both the client and server.

Some Semantic Scholar endpoints, like paper relevance search, require the use of the limit and offset parameters to handle pagination:

- Limit: Specifies the maximum number of items (e.g., papers) to be returned in a single API response. For example, in the request https://api.semanticscholar.org/graph/v1/paper/search?query=halloween&limit=3, the limit=3 indicates that the response should include a maximum of 3 papers.

- Offset: Represents the starting point from which the API should begin fetching items. It helps skip a certain number of items. For example, if offset=10, the API will start retrieving items from the 11th item onward.

Other endpoints, like paper bulk search, require the use of the token parameter to handle pagination:

- Token: A “next” token or identifier provided in the response, pointing to the next set of items. It allows fetching the next page of results.

In either case, the client requests the first page of results from the API. The API responds with a limited number of items. If there are more items to retrieve, the client can use the offset parameter or the next token in subsequent requests to get the next page of results until all items are fetched. This way, pagination allows clients to retrieve large datasets efficiently, page by page, based on their needs.
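The two pagination styles can be sketched as simple loops. To keep the example runnable without network access, the request itself is passed in as a fetch_page function, which is a hypothetical stand-in for your own request code. The response keys "data" and "token" are assumptions about the response shape and should be confirmed against the API documentation for the endpoint you call.

```python
def paginate_by_offset(fetch_page, limit):
    """Yield items page by page, advancing `offset` by `limit` each time."""
    offset = 0
    while True:
        page = fetch_page(offset=offset, limit=limit)
        items = page.get("data", [])
        yield from items
        # A page shorter than `limit` means there is nothing left to fetch.
        if len(items) < limit:
            break
        offset += limit


def paginate_by_token(fetch_page):
    """Yield items page by page, following the token returned with each page."""
    token = None
    while True:
        page = fetch_page(token=token)
        yield from page.get("data", [])
        token = page.get("token")
        if not token:
            break


# Fake "servers" standing in for real requests, for demonstration only.
ALL_ITEMS = list(range(7))


def fake_offset_fetch(offset, limit):
    return {"data": ALL_ITEMS[offset:offset + limit]}


TOKEN_PAGES = {
    None: {"data": [0, 1, 2], "token": "page-2"},
    "page-2": {"data": [3, 4], "token": None},
}


def fake_token_fetch(token):
    return TOKEN_PAGES[token]


print(list(paginate_by_offset(fake_offset_fetch, limit=3)))
print(list(paginate_by_token(fake_token_fetch)))
```

Writing both loops as generators lets the caller stop early, for example after the first few hundred items, without fetching the remaining pages.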

Examples using search query parameters

Semantic Scholar’s paper bulk search supports a variety of operators that enable advanced filtering and precise specifications in search queries. All keywords in the search query are matched against words in the paper’s title and abstract. Refer to the API Documentation for all supported operators. Below are examples of varying complexity to help you get started.

Example 1.

((cloud computing) | virtualization) +security -privacy

Matches papers containing the words "cloud" and "computing", OR the word "virtualization", in their title or abstract. The paper title or abstract must also include the term "security" and must not include the word "privacy". For example, a paper with the title "Ensuring Security in Cloud Computing Environments" could be included, unless its abstract contains the word "privacy".

Example 2.

"red blood cell" + artificial intelligence

Matches papers where the title or abstract contains the exact phrase "red blood cell" along with the words "artificial" and "intelligence". For example, a paper with the title "Applications of Artificial Intelligence in Healthcare" would be included if it also contained the phrase "red blood cell" in its abstract.

Example 3.

fish*

Matches papers where the title or abstract contains words that begin with "fish", such as "fishtank", "fishes", or "fishy". For example, a paper with the title "Ecology of Deep-Sea Fishes" would be included.

Example 4.

bugs~3

Matches papers where the title or abstract contains words within an edit distance of 3 of the word "bugs", such as "buggy", "but", "buns", "busg", etc. An edit is the addition, removal, or change of a single character.

Example 5.

"blue lake" ~3

Matches papers where the title or abstract contains phrases with up to 3 terms between the words specified in the phrase. For example, a paper titled "Preserving blue lakes during the winter" or with an abstract containing a phrase such as "blue fishes in the lake" would be included.
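Because these queries contain characters such as spaces, +, |, and quotation marks, they need to be URL-encoded when sent as the query parameter. The sketch below encodes each of the example queries with Python's standard library and checks that decoding gives back the original text. The resource path /paper/search/bulk is an assumption here and should be confirmed in the API documentation; bulk_search_url is a hypothetical helper.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

# Assumed URL of the paper bulk search endpoint; confirm it in the API documentation.
BULK_SEARCH_URL = "https://api.semanticscholar.org/graph/v1/paper/search/bulk"


def bulk_search_url(query, fields="title,year"):
    """Return a bulk search URL with the query and fields safely encoded."""
    return BULK_SEARCH_URL + "?" + urlencode({"query": query, "fields": fields})


queries = [
    "((cloud computing) | virtualization) +security -privacy",
    '"red blood cell" + artificial intelligence',
    "fish*",
    "bugs~3",
    '"blue lake" ~3',
]

for query in queries:
    url = bulk_search_url(query)
    # Decoding the URL again should give back the original query text.
    decoded = parse_qs(urlsplit(url).query)["query"][0]
    print(decoded == query, url)
```

Libraries such as Requests perform this encoding automatically when the query is passed through the params argument, so manual encoding is mainly needed when building URLs by hand.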

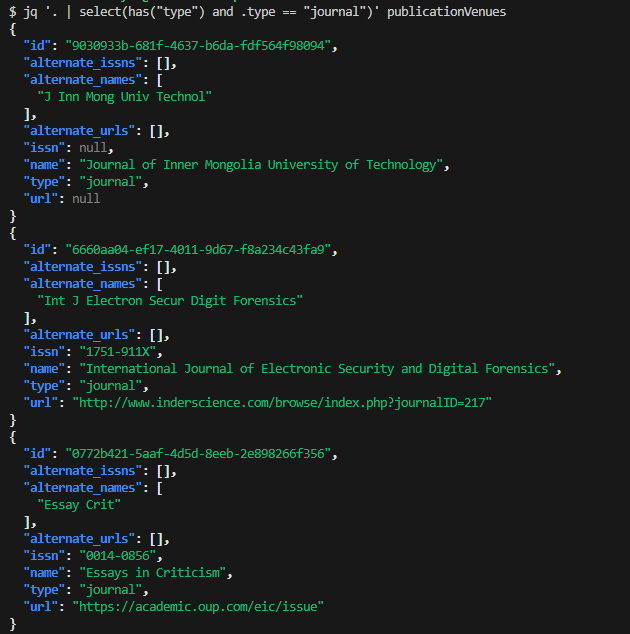

How to download full datasets

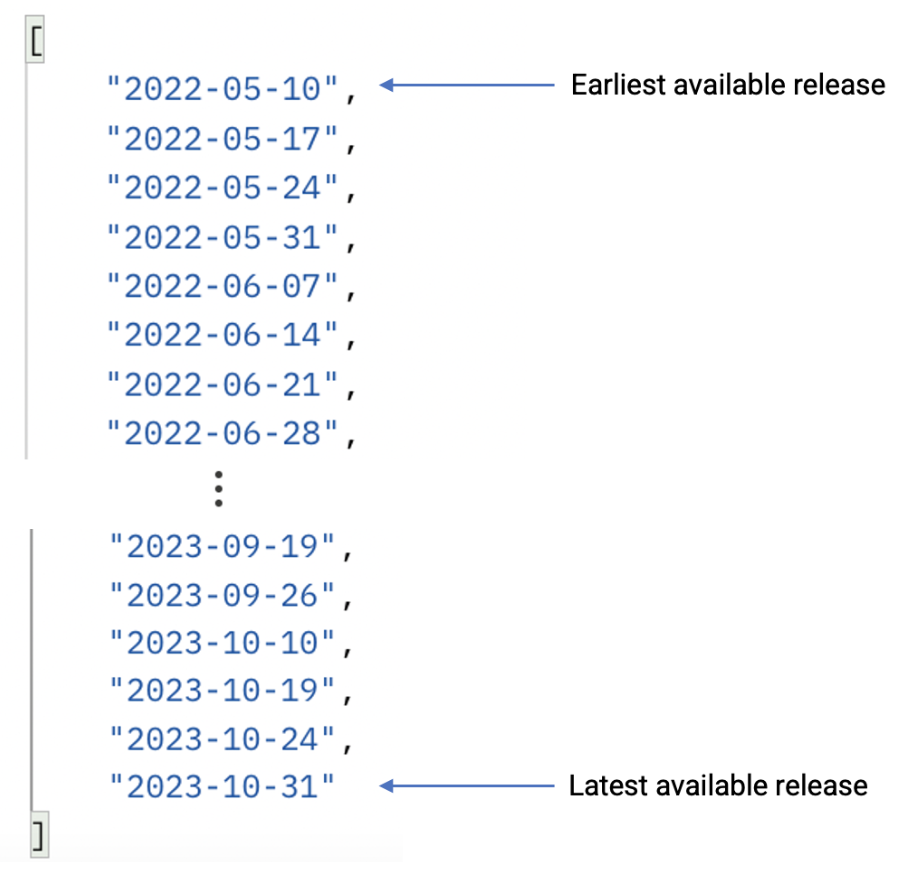

Semantic Scholar datasets contain data on papers, authors, abstracts, embeddings, and more. Datasets are grouped by releases, and each release is a snapshot of the datasets at the time of that release date. Make requests to the Datasets API to see the list of available release dates, to list the datasets contained in a given release, and to get download links for datasets.

All Semantic Scholar datasets are delivered in JSON format.

Step 1: See all release dates

Use the list of available releases endpoint to see all dataset release dates.

import requests

# Define base URL for datasets API
base_url = "https://api.semanticscholar.org/datasets/v1/release/"

# To get the list of available releases make a request to the base url. No additional parameters needed.
response = requests.get(base_url)

# Print the response data
print(response.json())

The response is a list of release dates, which contains all releases through the date the request was made:

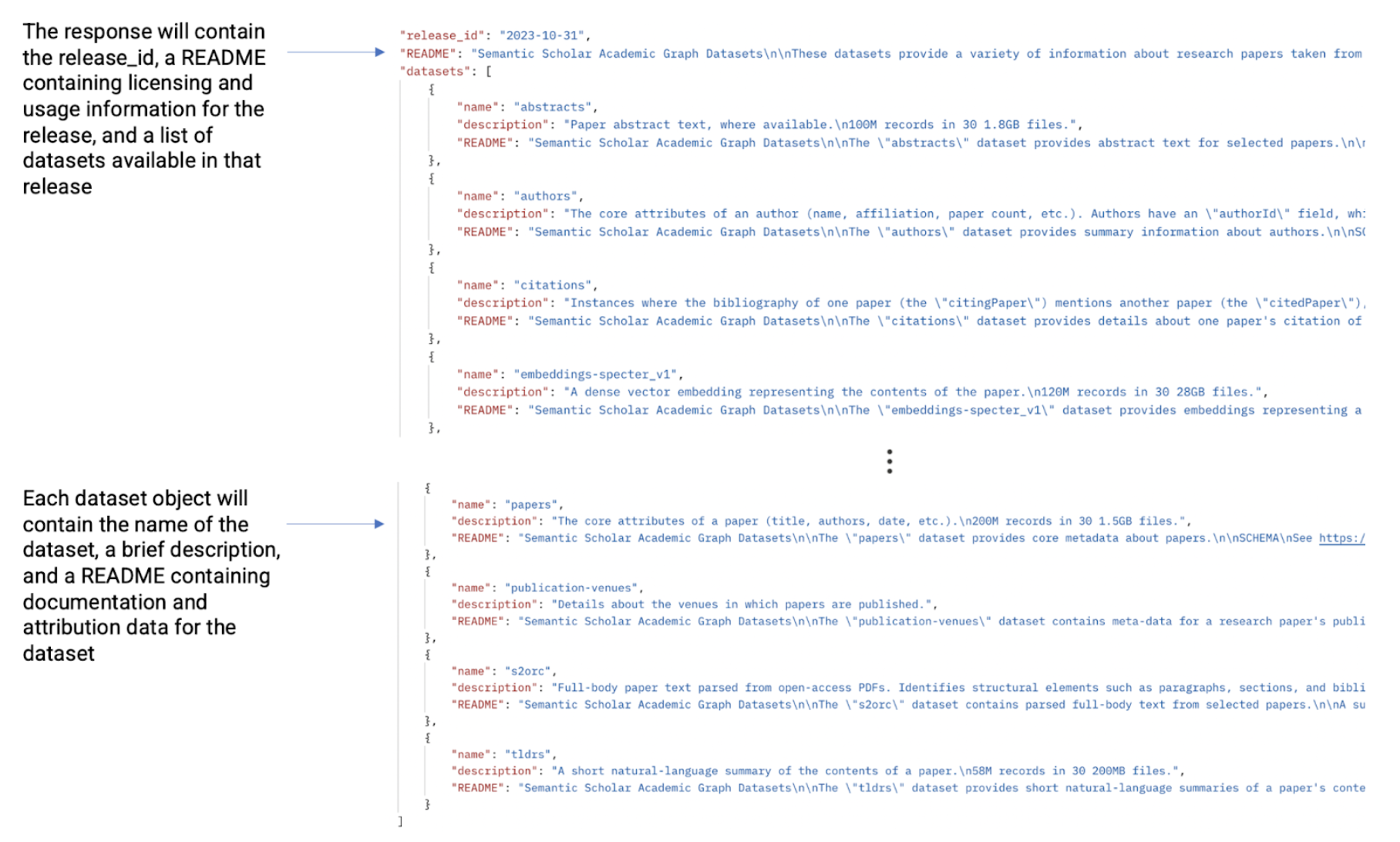

Step 2: See all datasets for a given release date

Use the list of datasets in a release endpoint to see all datasets contained in a given release. The endpoint takes the release_id, which is simply the release date, as a path parameter. The release_id can also be set to “latest” instead of an actual date to retrieve datasets from the latest release.

import requests

base_url = "https://api.semanticscholar.org/datasets/v1/release/"

# Set the release id
release_id = "2023-10-31"

# Make a request to get the datasets available in that release
response = requests.get(base_url + release_id)

# Print the response data
print(response.json())

Step 3: Get download links for datasets

Use the download links for a dataset endpoint to get download links for a specific dataset at a specific release date. This step requires the use of a Semantic Scholar API key.

import requests

base_url = "https://api.semanticscholar.org/datasets/v1/release/"

# This endpoint requires authentication via api key
api_key = "your api key goes here"
headers = {"x-api-key": api_key}

# Set the release id
release_id = "2023-10-31"

# Define dataset name you want to download
dataset_name = 'papers'

# Send the GET request and store the response in a variable
response = requests.get(base_url + release_id + '/dataset/' + dataset_name, headers=headers)

# Process and print the response data
print(response.json())

The response contains the dataset name, description, a README with license and usage information, and temporary, pre-signed download links for the dataset files.
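Before downloading, it can help to decide where each file will be saved. The sketch below derives a local file name from each pre-signed link by dropping its query string. It assumes the links are returned as a list under a "files" key, which should be checked against the actual response; the helper names and the sample links are hypothetical, and the download itself is left out.

```python
import os
from urllib.parse import urlsplit


def local_filename(download_link):
    """Derive a local file name from a pre-signed link by dropping its query string."""
    return os.path.basename(urlsplit(download_link).path)


def plan_downloads(dataset_response, target_dir="."):
    """Pair every download link with the local path it would be saved to."""
    links = dataset_response.get("files", [])  # assumed key name
    return [(link, os.path.join(target_dir, local_filename(link))) for link in links]


# Hypothetical response with made-up links, for illustration only.
example_response = {
    "name": "papers",
    "files": [
        "https://example.com/releases/papers/part-000.jsonl.gz?signature=abc",
        "https://example.com/releases/papers/part-001.jsonl.gz?signature=def",
    ],
}

for link, path in plan_downloads(example_response, target_dir="data"):
    print(path)
```

Since the links are temporary, it is safer to request them shortly before downloading rather than storing them for later use.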

How to update datasets with incremental diffs

The incremental diffs endpoint in the Datasets API allows users to get a comprehensive list of changes—or “diffs”—between any two releases. Full datasets can be updated from one release to another to avoid downloading and processing data that hasn't changed. This endpoint requires the use of a Semantic Scholar API key.

This endpoint returns a list of all the "diffs" required to catch a given dataset up from the start release date to the end release date, with each “diff” object containing only the changes from one release to the next sequential release.

Each "diff" object itself contains two lists of files: an "update files" list and a "delete files" list. Records in the "update files" list need to be inserted or replaced by their primary key. Records in the "delete files" list should be removed from your dataset.

import requests

# Set the path parameters
start_release_id = "2023-10-31"
end_release_id = "2023-11-14"
dataset_name = "authors"

# Set the API key. For best practice, store and retrieve API keys via environment variables
api_key = "your api key goes here"
headers = {"x-api-key": api_key}

# Construct the complete endpoint URL with the path parameters
url = f"https://api.semanticscholar.org/datasets/v1/diffs/{start_release_id}/to/{end_release_id}/{dataset_name}"

# Make the API request
response = requests.get(url, headers=headers)

# Extract the diffs from the response
diffs = response.json()['diffs']
print(diffs)
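The update and delete rules described above can be sketched on an in-memory copy of a dataset. This example assumes the records from the "update files" and "delete files" lists have already been downloaded and parsed into Python dictionaries, and that authorid serves as the primary key of the authors dataset; apply_diff is a hypothetical helper, and the sample records are made up.

```python
def apply_diff(records_by_key, update_records, delete_records, key="authorid"):
    """Apply one diff in place: upsert the update records, then remove the deleted ones."""
    for record in update_records:
        # Insert the record, or replace the existing one with the same primary key.
        records_by_key[record[key]] = record
    for record in delete_records:
        # Ignore deletions for records that are not in the local copy.
        records_by_key.pop(record[key], None)
    return records_by_key


# Made-up local dataset, keyed by primary key.
authors = {
    1: {"authorid": 1, "name": "Author One"},
    2: {"authorid": 2, "name": "Author Two"},
}
updates = [
    {"authorid": 2, "name": "Author Two (updated)"},
    {"authorid": 3, "name": "Author Three"},
]
deletes = [{"authorid": 1}]

apply_diff(authors, updates, deletes)
print(sorted(authors))
print(authors[2]["name"])
```

When several diffs are returned, they would be applied one after another in the order given, since each one only covers the step from one release to the next.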

Tips for working with downloaded datasets

Explore the following sections for inspiration on leveraging your downloaded data. Please be aware that the tools, libraries, and frameworks mentioned below are not a comprehensive list and their performance will vary based on the size of your data and machine’s capabilities. They are all external tools with no affiliation to Semantic Scholar, and are simply offered as suggestions to facilitate your initial exploration of our data.

Command line tools

Perhaps the simplest way to view your downloaded data is via the command line through commands like more and tools like jq.

1. The more command

You can use the more command without installing any external tool or library. This command is used to display the contents of a file in a paginated manner and lets you page through the contents of your downloaded file in chunks without loading up the entire dataset. It shows one screen of text at a time and allows you to navigate through the file using the spacebar (move forward one screen) and Enter (move forward one line) commands.

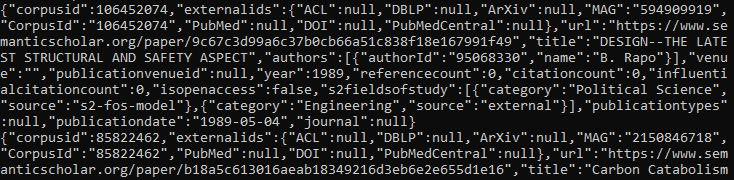

Example: You downloaded the papers dataset, and renamed the file to “papersDataset”. Use the “more papersDataset” command to view the file:
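For readers who prefer to stay in Python, a comparable way to peek at the start of a file without loading all of it is sketched below. It assumes an uncompressed file with one JSON object per line; peek_records is a hypothetical helper, and the demo writes a tiny made-up file to a temporary directory so that it can run on its own.

```python
import json
import os
import tempfile
from itertools import islice


def peek_records(path, count=3):
    """Return the first `count` JSON records from a one-object-per-line file."""
    with open(path, encoding="utf-8") as handle:
        # islice stops reading after `count` lines, so the rest of the file is never loaded.
        return [json.loads(line) for line in islice(handle, count)]


# Demo with a small made-up file standing in for a downloaded dataset.
with tempfile.TemporaryDirectory() as folder:
    sample_path = os.path.join(folder, "papersDataset")
    with open(sample_path, "w", encoding="utf-8") as handle:
        handle.write('{"corpusid": 1, "title": "First"}\n')
        handle.write('{"corpusid": 2, "title": "Second"}\n')
        handle.write('{"corpusid": 3, "title": "Third"}\n')
    for record in peek_records(sample_path, count=2):
        print(record)
```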

2. The jq tool

jq is a lightweight and flexible command-line tool for exploring and manipulating JSON data. With jq, you can easily view formatted json output, select and view specific fields, filter data based on conditions, and more.

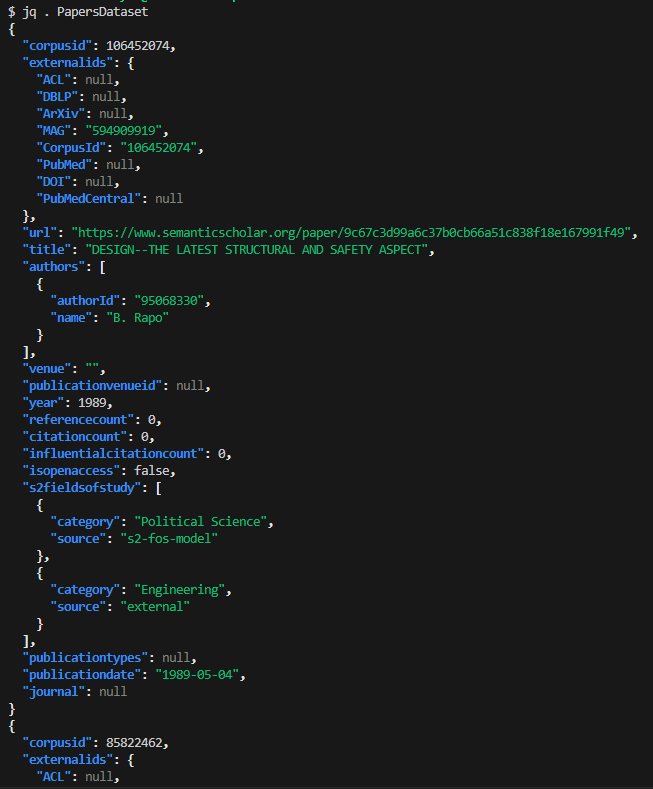

Example: You downloaded the papers dataset, and renamed the file to “papersDataset”. The jq command to format output is jq '.' <file-name>, so use the jq '.' papersDataset command to view the formatted file.

Example: You want to keep only the publication venues that are journals. You can use jq to filter json objects by a condition with the command jq '. | select(has("type") and .type == "journal")' publicationVenues
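The same condition can be expressed in plain Python, which may be convenient when the filter is one step in a longer script. The sketch below assumes one JSON object per line and uses a few made-up venue records; select_journals is a hypothetical helper.

```python
import io
import json


def select_journals(lines):
    """Yield records whose "type" field exists and equals "journal"."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        if record.get("type") == "journal":
            yield record


# Made-up records standing in for the publication venues file.
sample = io.StringIO(
    '{"id": "v1", "name": "Venue One", "type": "journal"}\n'
    '{"id": "v2", "name": "Venue Two", "type": "conference"}\n'
    '{"id": "v3", "name": "Venue Three"}\n'
)

print([record["name"] for record in select_journals(sample)])
```

To run it on a real file, pass an open file object in place of the in-memory sample.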

Python Pandas library

Pandas is a powerful and easy-to-use data analysis and manipulation library available in Python. Using Pandas, you can effortlessly import, clean, and explore your data. One of the key structures in Pandas is a DataFrame, which can be thought of as a table of information, akin to a spreadsheet with rows and columns. Each column has a name, similar to a header in Excel, and each row represents a set of related data. With a DataFrame, tasks like sorting, filtering, and analyzing your data are straightforward. Now we will see how to leverage basic Pandas functions to view and explore our Semantic Scholar data in a DataFrame.

Example: The head function. In Pandas you can use the head( ) function to view the initial few rows of your dataframe.

import pandas as pd

# Read JSON file into Pandas DataFrame. The ‘lines’ parameter indicates that our file contains one json object per line

df = pd.read_json('publication venues dataset', lines=True)

# Print the first few rows of the DataFrame

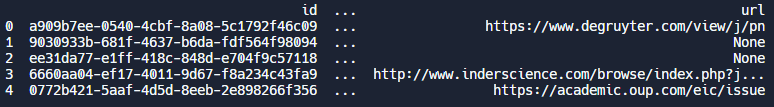

print(df.head())The output is below. You will notice that this is a very wide dataframe, where each column represents a field in our json object (e.g. id, name, issn, url, etc.). By default pandas only shows the first and last columns. To view all the columns, you can configure the pandas display settings before printing your output, with pd.set_option('display.max_columns', None)

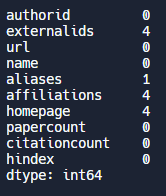

Example: The count function. We can use the count( ) function to count the number of rows that have data in them (i.e., not null). This can be useful to test the quality of your dataset.

# Display count of non-null values for each column

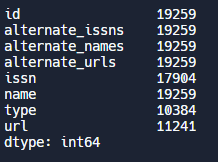

print(df.count())Output:

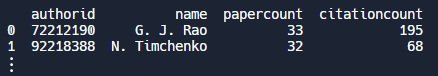

Example: Filtering. We can filter our data by specifying conditions. For example, let’s assume we have loaded our authors dataset into a dataframe, and want to filter by authors who have written at least 5 papers and been cited at least 10 times. After applying this filter, let's select and display only the authorid, name, papercount, and citationcount fields.

#filter dataframe by authors who have more than 5 publications and have been cited at least 10 times

df = df[(df.papercount >= 5) & (df.citationcount >= 10)]

# Select and print a subset of the columns in our filtered dataframe

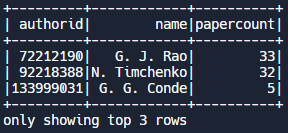

print(df[['authorid', 'name', 'papercount', 'citationcount']])Output:

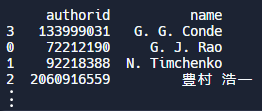

Example: Sorting. Pandas offers a variety of sorting functions to organize our data. In the example below, we use the sort_values( ) function to sort the dataframe by the “name” column and only display the authorid and name columns. The default is ascending order, so in this case our output will list authors in alphabetical order.

# Let's sort our authors in alphabetical order
df = df.sort_values(by='name')

# Display only the authorid and name columns
print(df[['authorid', 'name']])

Example: Check for missing values. Let’s say we want to assess the quality of our data by checking for missing (null) values. We can count how many missing values we have by using the isnull() and sum() functions.

# Count and print the number of missing values for each author attribute
print(df.isnull().sum())

Apache Spark (Python examples)

Apache Spark is a fast and powerful processing engine that can analyze large-scale data faster than traditional methods via in-memory caching and optimized query execution. Spark offers APIs for a variety of programming languages, so you can utilize its capabilities regardless of the language you are coding in. In our examples we will showcase the Spark Python API, commonly known as PySpark.

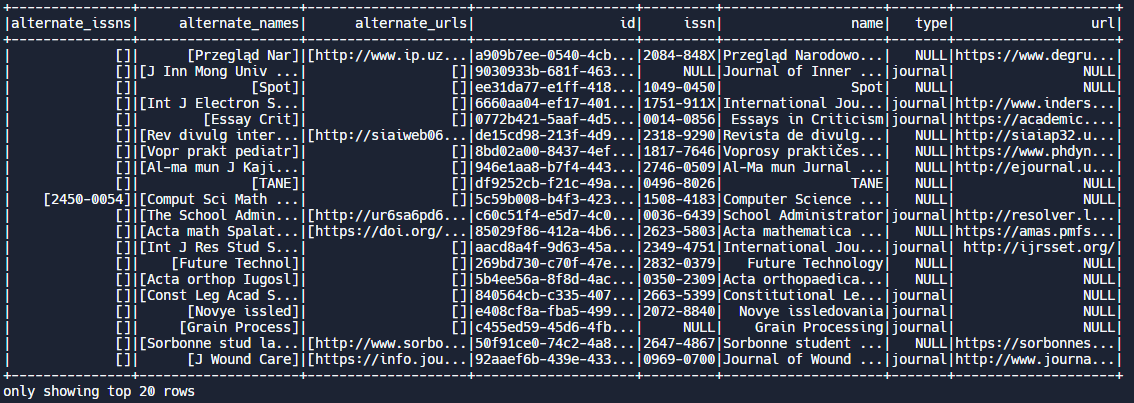

Example: The show function. PySpark’s show( ) function is similar to print( ) or head( ) in pandas and will display the first few rows of data. Let’s load up our publication venues data into a PySpark DataFrame and see how it looks:

from pyspark.sql import SparkSession

# Create a Spark session
spark = SparkSession.builder.appName("dataset_exploration").getOrCreate()

# Read the dataset file named 'publication venues dataset' into a PySpark DataFrame. Depending on the directory you are working from you may need to include the complete file path.
df = spark.read.json("publication venues dataset")

# Display the first few rows
df.show()

Output:

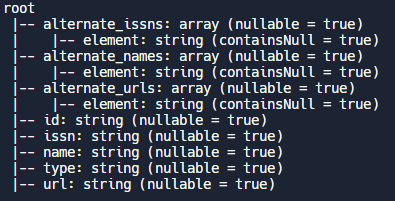

Example: The printSchema function. PySpark offers a handy printSchema( ) function if you want to explore the structure of your data.

# Display the object schema
df.printSchema()

Output:

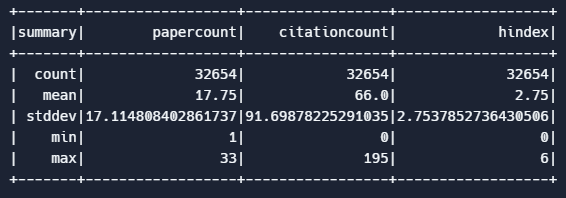

Example: Summary statistics. PySpark offers a handy describe( ) function to delve into and display summary statistics for the specified columns in our dataset. In this example we describe the papercount, citationcount, and hindex attributes of our author data. In the results we can see the average papercount of authors in this dataset, along with their average citationcount, hindex, and other common statistical measures.

df.describe(["papercount", "citationcount", "hindex"]).show()

Example: Sorting. We can call the orderBy( ) function and specify the column we want to sort by, in this case papercount. We call the desc() function to sort in descending order (from highest to lowest papercount), select only the authorid, name, and papercount fields, and display the top 3 records.

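from pyspark.sql.functions import col

# Sort authors by papercount, from highest to lowest
df = df.orderBy(col("papercount").desc())

# Display the top 3 records with only the selected fields
df.select("authorid", "name", "papercount").show(3)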

MongoDB

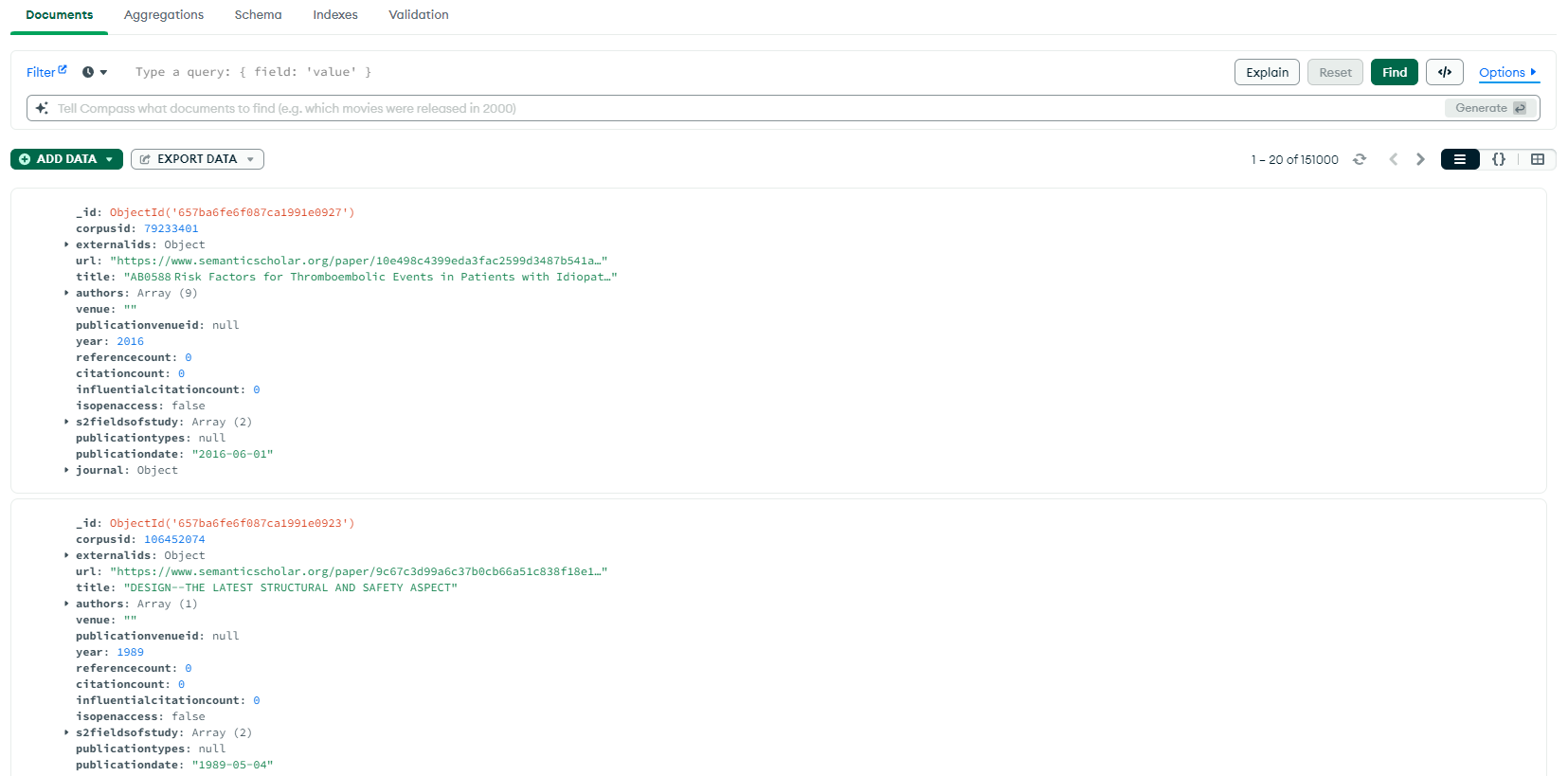

MongoDB is a fast and flexible database tool built for exploring and analyzing large scale datasets. Think of it as a robust digital warehouse where you can efficiently organize, store, and retrieve large volumes of data. In addition, MongoDB is a NoSQL database that stores data in a flexible schema-less format, scales horizontally, supports various data models, and is optimized for performance. MongoDB offers both on-premise and fully managed cloud options (Atlas) and can be accessed via the Mongo shell or a GUI (known as Mongo Compass). You can check out our guide on setting up Mongo if you need help getting started. In the example below, we have imported a papers dataset into a Mongo Atlas cluster and show you how to leverage the Mongo Compass GUI to view and explore your data.

Once you have imported your data, you can view it via Compass as shown in the example below. You can leverage the Compass documentation to discover all its capabilities. We have listed some key items on the user interface to get you acquainted:

- Data can be viewed in the default list view (shown below), object view, or table view by toggling the button on the upper right hand corner. In the list view, each ‘card’ displays a single record, or in this case a paper object. Notice that MongoDB appends its own ID, known as ObjectId to each record.

- You can filter and analyze your data using the filter pane at the top of the screen, and click on the Explain button to see how your filters were applied to obtain your result set. Note that since Mongo is a NoSQL database, it has a slightly different query language from SQL to use for filtering and manipulation.

- The default tab is the Documents tab where you can view and scroll through your data. You can also switch to the Aggregations tab to transform, filter, group, and perform aggregate operations on your dataset. In the Schema tab, Mongo provides an analysis of the schema of your dataset. When you click on the Indexes tab, you will find that the default index for searches is Mongo’s ObjectId. If you believe you will perform frequent searches using another attribute (e.g. corpusid), you can add an additional index to optimize performance.

- You can always add more data to your dataset via the green Add Data button right under the filter query bar.

Setting Up MongoDB

You have the option of installing MongoDB onto your machine, or using their managed database-as-a-service option on the cloud, otherwise known as Atlas. Once you set up your database, you can download the GUI tool (Mongo Compass) and connect it to your database to visually interact with your data. If you are new to MongoDB and just want to explore, you can set up a free cluster on Atlas with just a few easy steps:

Set Up a Free Cluster on MongoDB Atlas:

- Sign Up/Login:

1.1. Visit the MongoDB Atlas website.

1.2. Sign up for a new account or log in if you already have one.

- Create a New Cluster:

2.1. After logging in, click on "Build a Cluster."

2.2. Choose the free tier (M0) or another desired plan.

2.3. Select your preferred cloud provider and region.

- Configure Cluster:

3.1. Set up additional configurations, such as cluster name and cluster tier.

3.2. Click "Create Cluster" to initiate the cluster deployment. It may take a few minutes.

Connect to MongoDB Compass:

- Download and Install MongoDB Compass:

1.1. Download MongoDB Compass from the official website.

1.2. Install the Compass application on your computer.

- Retrieve Connection String:

2.1. In MongoDB Atlas, go to the "Clusters" section.

2.2. Click on "Connect" for your cluster.

2.3. Choose "Connect Your Application."

2.4. Copy the connection string.

- Connect Compass to Atlas:

3.1. Open MongoDB Compass.

3.2. Paste the connection string in the connection dialog.

3.3. Modify the username, password, and database name if needed.

3.4. Click "Connect."

Import Data:

- Create a Database and Collection:

1.1. In MongoDB Compass, navigate to the "Database" tab.

1.2. Create a new database and collection by clicking "Create Database" and "Add My Own Data."

- Import Data:

2.1. In the new collection, click "Add Data" and choose "Import File."

2.2. Select your JSON or CSV file containing the data.

2.3. Map fields if necessary and click "Import."

- Verify Data:

3.1. Explore the imported data in MongoDB Compass to ensure it's displayed correctly.

Now, you have successfully set up a free cluster on MongoDB Atlas, connected MongoDB Compass to the cluster, and imported data into your MongoDB database. This process allows you to start working with your data using MongoDB's powerful tools.

TIP: We recommend checking the Mongo website for the latest installation instructions and FAQ in case you run into any issues.

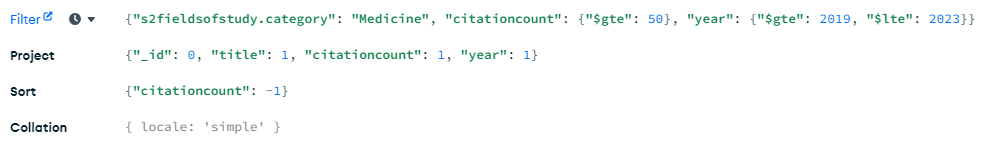

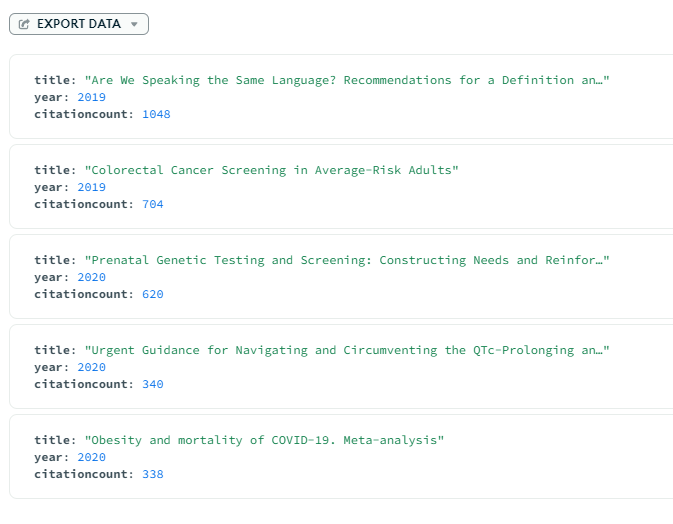

Example: Querying, Filtering, and Sorting. Using the Mongo Compass GUI we can filter and sort our dataset per our needs. For example, let's see which papers in Medicine were cited the most in the last 5 years, and exclude any papers with under 50 citations. In the project field we choose which fields we would like to display in the output, and we sort in descending order by citationcount.

{
    's2fieldsofstudy.category': 'Medicine',
    'citationcount': {
        '$gte': 50
    },
    'year': {
        '$gte': 2019,
        '$lte': 2023
    }
}


Working with Multiple Datasets

Oftentimes we may want to combine information from multiple datasets to gather insights. Consider the following example:

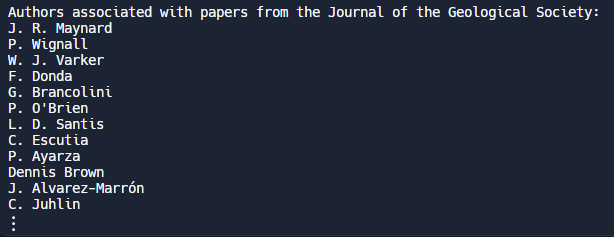

Use case: Let’s delve into a publication venue, such as the “Journal of the Geological Society”, and learn more about the papers that have been published in it. Perhaps we would like to gather the names of authors who have published a paper in this journal, but only those whose papers have been cited at least 15 times. We can combine information from the publication venues dataset and the papers dataset to find the authors that meet these criteria. To do this, we can load our datasets into pandas dataframes and retrieve the publication venue ID associated with the “Journal of the Geological Society” from the publication venues dataset. Then we can search the papers dataset for papers that have a citationcount of at least 15 and are tagged to that venue ID. Finally, we can collect the names of authors associated with each of the papers that met our criteria. From this point you can explore other possibilities, such as viewing other papers published by those authors, checking out their homepage on the Semantic Scholar website, and more.

Python Example:

import pandas as pd

# Create Pandas DataFrames
papers_df = pd.read_json('papersDataset', lines=True)
venues_df = pd.read_json('publicationVenuesDataset', lines=True)

# Find the venue id for our publication venue of interest - "Journal of the Geological Society"
publication_venue_id = venues_df.loc[venues_df["name"] == "Journal of the Geological Society", "id"].values[0]

# Filter papers based on the venue id with a citation count of at least 15
filtered_geology_papers = papers_df.loc[
    (papers_df["publicationvenueid"] == publication_venue_id) & (papers_df["citationcount"] >= 15)
]

# Traverse the list of authors for each paper that met our filter criteria and collect their names into a list
author_names = []
for authors_list in filtered_geology_papers["authors"]:
    author_names.extend(author["name"] for author in authors_list)

# Print the resulting author names, with each name on a new line
print("Authors associated with papers from the Journal of the Geological Society:")
print(*author_names, sep="\n")